Overview

Autonomous spacecraft promise to revolutionise future missions by enabling vehicles to operate with limited human input. But with this increased independence comes vulnerability—particularly to subtle cyberattacks that can manipulate AI decision-making.

Researchers at City St George’s, University of London, have developed a novel AI-based detection system to spot stealthy adversarial attacks on spacecraft vision systems—protecting critical guidance and control functions from malicious interference. This work, led by Professor Nabil Aouf in collaboration with the European Space Agency (ESA), represents a major step towards resilient space autonomy.

The Challenge the Research is Responding To

Modern spacecraft increasingly rely on AI vision systems to interpret their environment—whether navigating space debris, docking with satellites, or forming constellations. These systems process camera images to make real-time decisions about movement and positioning.

However, AI systems are not immune to manipulation. A specific type of threat—adversarial image perturbations—involves making small, nearly invisible changes to visual input that cause an AI model to make incorrect predictions. In the context of space, this could mean a spacecraft misjudging its orientation or trajectory, potentially leading to mission failure or collision.

The challenge is twofold:

• Detect these imperceptible attacks in real time, without slowing down mission-critical operations.

• Explain how and why the AI is being fooled—so that future systems can be hardened against such attacks.

Partnerships Involved

This research was made possible through collaboration between the Autonomy of Systems Research Centre at City St George’s, University of London, and the European Space Agency (ESA), which contributed domain expertise in spacecraft systems, with further input from the fields of AI security, machine learning, and robotics. The partnership enabled rigorous simulation and real-world testing, drawing on expertise across aerospace engineering, cybersecurity, and AI interpretability.

Our Research

Led by Professor Nabil Aouf, the project team developed a multi-layered approach combining AI, simulation, and explainability:

• Virtual mission modelling: A simulated environment was created where a “chaser” spacecraft navigates towards a satellite (Jason-1) using onboard AI and camera vision.

• Adversarial testing: Subtle attacks were generated using established techniques (e.g. the Fast Gradient Sign Method, FGSM), designed to trick the AI’s image processing model without being visually detectable.

• AI detection pipeline: A neural network trained to estimate the spacecraft’s relative pose was enhanced with SHAP (SHapley Additive exPlanations)—a method that reveals which parts of the image the AI uses to make decisions.

• Temporal analysis: To spot inconsistencies over time, the team introduced a Long Short-Term Memory (LSTM) model, which detects patterns in the AI’s reasoning that suggest the presence of an attack.

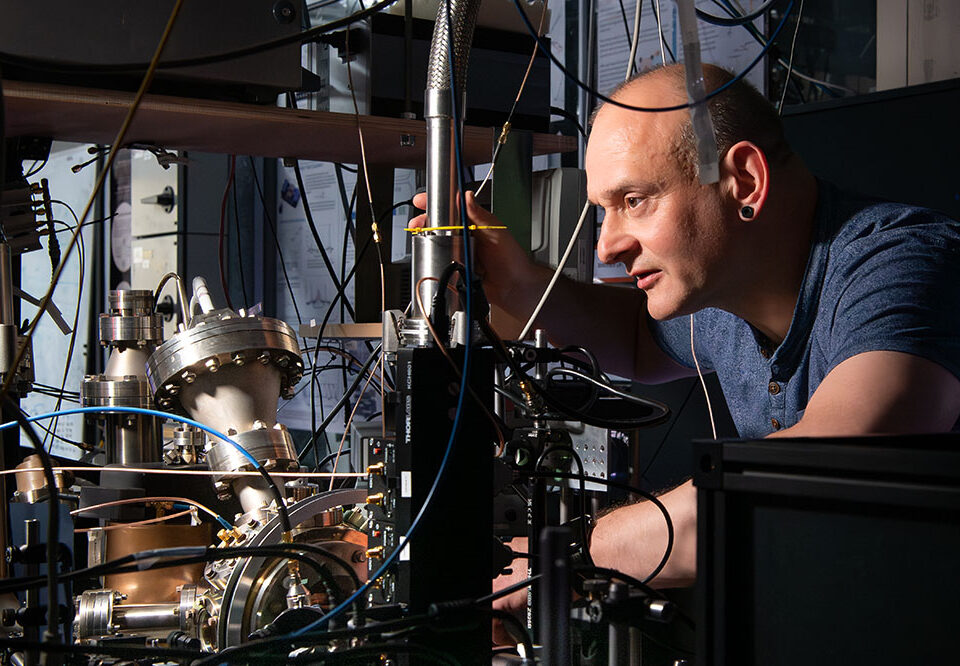

• Real-world validation: The system was tested not only in simulation but also on scaled physical models, using real camera optics to replicate operational challenges.
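
To make the adversarial testing step more concrete, here is a minimal sketch of the Fast Gradient Sign Method. It is an illustration only, not the project's implementation: the toy linear model, the four-pixel "image", the target value, and the perturbation budget `epsilon` are all hypothetical stand-ins for the pose-estimation network and camera imagery described above. The idea is to nudge every pixel by a tiny fixed amount in whichever direction increases the model's error.

```python
# Minimal FGSM sketch on a toy linear "pose regressor".
# Everything here is a hypothetical stand-in, not the project's actual model.

def predict(weights, image):
    """Toy model: a weighted sum of pixel intensities."""
    return sum(w * x for w, x in zip(weights, image))

def loss_gradient(weights, image, target):
    """Gradient of the squared error (prediction - target)^2 w.r.t. each pixel."""
    error = predict(weights, image) - target
    return [2.0 * error * w for w in weights]

def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def fgsm(weights, image, target, epsilon):
    """Shift each pixel by +/- epsilon in the direction that increases the loss."""
    grad = loss_gradient(weights, image, target)
    perturbed = [x + epsilon * sign(g) for x, g in zip(image, grad)]
    # Clamp so the result is still a valid image with pixels in [0, 1].
    return [min(1.0, max(0.0, p)) for p in perturbed]

weights = [0.5, -0.25, 0.75, -1.0]  # hypothetical model parameters
image = [0.2, 0.4, 0.6, 0.8]        # hypothetical 4-pixel "image"
target = 0.0                        # hypothetical ground-truth value
epsilon = 0.01                      # hypothetical per-pixel perturbation budget

adversarial = fgsm(weights, image, target, epsilon)
clean_error = abs(predict(weights, image) - target)
attacked_error = abs(predict(weights, adversarial) - target)
max_pixel_change = max(abs(a - b) for a, b in zip(adversarial, image))

print(f"clean error:      {clean_error:.4f}")
print(f"attacked error:   {attacked_error:.4f}")
print(f"max pixel change: {max_pixel_change:.4f}")
```

In this toy case no pixel moves by more than `epsilon`, yet the model's error grows. A real attack on a deep network would obtain the gradient by backpropagation rather than from a closed-form expression, but the perturbation rule is the same.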

Our Impact

The research has produced a powerful method to detect and understand cyberattacks on autonomous spacecraft—combining high accuracy with real-world relevance:

• 99.2% detection accuracy in simulation, and 96.3% on real-world test imagery

• Improved visibility into how AI models “think”, helping engineers debug or secure future space systems

• A modular framework that can be integrated into spacecraft guidance, navigation, and control (GNC) systems

• Demonstrated viability of explainable AI (XAI) techniques in critical aerospace applications

• A contribution to ESA’s ongoing efforts to make autonomous systems cyber-secure by design

This work not only strengthens the security of autonomous spacecraft but also sets a precedent for applying trustworthy AI principles in other high-stakes domains, such as drones, autonomous vehicles, and smart energy systems.